Install Kubernetes using kubeadm on Ubuntu

Kubernetes is a container orchestration engine that automates the deployment, scaling and management of containerised, cloud-native applications. I have used Kubernetes to deploy various applications in production, both in the cloud and in on-premises data centres.

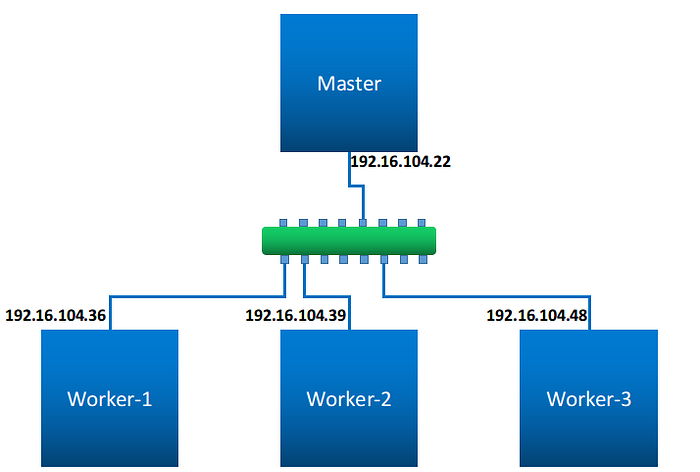

Here I will explain how I deployed Kubernetes on VMs in my on-premises data centre. I used four Ubuntu VMs running in an OpenStack environment. You can create the VMs with any virtualization tool, such as OpenStack, VirtualBox or Vagrant, or you can even use physical machines. Just make sure that all the nodes can reach each other over IP.

Master node: 1 node, 4 GB RAM, 2 vCPUs, 20 GB HDD

Worker nodes: 3 nodes, each with 8 GB RAM, 4 vCPUs, 80 GB HDD

You don't have to use these exact specifications, but each machine needs at least 2 GB of RAM and 2 vCPUs. You can even run a single-node Kubernetes cluster. I explain how to do that later in this guide.

Networking

All the nodes I'm using have a single network interface, which carries both data-plane and control-plane traffic.

Installation

Run the following commands on all your nodes unless stated otherwise.

First, update the system and make sure that it runs the latest packages.

$ sudo apt-get update && sudo apt-get upgrade

The MAC address and product UUID must be unique for every node. Kubernetes uses these values to identify the nodes in the cluster, and the installation may fail if they are duplicated. In most cases they will be unique, but some virtual machines can end up with identical values. More details can be found here. You can check both values on each node with the commands below.

$ ip link

$ sudo cat /sys/class/dmi/id/product_uuid

Next, each node needs a container runtime to run containers, so we will install Docker on every node. To do this, we download Docker's convenience script from the Docker site and execute it. The script downloads and installs the Docker packages. More details can be found here.

Warning: Always inspect a downloaded script before running it.

$ curl -fsSL https://get.docker.com -o get-docker.sh

$ sudo sh get-docker.sh

Now we will install the Kubernetes components. First we install a prerequisite package and add the signing key of the Kubernetes package repository. Then we add the repository itself and install the Kubernetes packages.

$ sudo apt-get update && sudo apt-get install -y apt-transport-https curl

$ curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

$ cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list
deb https://apt.kubernetes.io/ kubernetes-xenial main
EOF

$ sudo apt-get update

$ sudo apt-get install -y kubelet kubeadm kubectl

Once all of the above commands have finished successfully, every package Kubernetes needs is installed. In the following section, we will see how to start the cluster.
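Two things have changed in newer Kubernetes releases and affect the steps above.

- The apt.kubernetes.io repository has been deprecated and frozen in favour of the community-owned pkgs.k8s.io repositories.
- Kubernetes 1.24 removed dockershim. The kubelet therefore talks to the containerd daemon that get-docker.sh installs, not to Docker itself. Docker's containerd.io package ships a config that disables containerd's "cri" plugin, so that plugin has to be re-enabled.

The sketch below covers both changes. It assumes containerd 1.x (whose default config contains `SystemdCgroup = false`) and uses v1.30 only as an example minor version. The function names are hypothetical wrappers around the real apt, gpg and containerd commands.

```shell
# Sketch only. Assumptions: Ubuntu, containerd 1.x from get-docker.sh,
# and v1.30 as an example Kubernetes minor version.

KEYRING=/etc/apt/keyrings/kubernetes-apt-keyring.gpg

# Print the pkgs.k8s.io apt source line for one Kubernetes minor release.
k8s_apt_source_line() {
  echo "deb [signed-by=${KEYRING}] https://pkgs.k8s.io/core:/stable:/$1/deb/ /"
}

# Add the repository, install the packages and hold them at their current
# version so a routine "apt-get upgrade" does not change it. Run as root.
install_k8s_packages() {
  local minor="${1:-v1.30}"
  apt-get update
  apt-get install -y apt-transport-https ca-certificates curl gpg
  mkdir -p -m 755 /etc/apt/keyrings
  curl -fsSL "https://pkgs.k8s.io/core:/stable:/${minor}/deb/Release.key" \
    | gpg --yes --dearmor -o "$KEYRING"
  k8s_apt_source_line "$minor" > /etc/apt/sources.list.d/kubernetes.list
  apt-get update
  apt-get install -y kubelet kubeadm kubectl
  apt-mark hold kubelet kubeadm kubectl
}

# Switch the runc runtime in a containerd config file to the systemd cgroup driver.
set_systemd_cgroup() {
  sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' "$1"
}

# Replace Docker's containerd config (which disables "cri") with containerd's
# full default config, switch it to systemd cgroups and restart. Run as root.
configure_containerd() {
  local cfg=/etc/containerd/config.toml
  containerd config default > "$cfg"
  set_systemd_cgroup "$cfg"
  systemctl restart containerd
}
```

On each node, as root and after running get-docker.sh, you would call `configure_containerd` and then `install_k8s_packages v1.30` in place of the repository commands above.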

Start Kubernetes Cluster

First, we will deploy Kubernetes on the master node. Then we will join the worker nodes to this master node to form a cluster.

$ sudo kubeadm init --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=172.16.104.22

The above command deploys the Kubernetes control plane on your master node.

The “--pod-network-cidr” option sets the CIDR from which pods inside the cluster get their IP addresses. 10.244.0.0/16 is the default network in the flannel manifest used later in this guide. You don't need to change it unless it overlaps with your VM network. If you do change it, set the same range in flannel's configuration.

The “--apiserver-advertise-address” option is the master IP address on which the API server listens, which the worker nodes use to reach it when forming the cluster. If your nodes have multiple network interfaces, use this option to control which IP the Kubernetes cluster uses.
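To check whether a node address falls inside the pod network CIDR before running kubeadm init, a small pure-Bash helper is enough. This is a sketch with hypothetical function names. It assumes IPv4 addresses written without leading zeros in the octets.

```shell
# Convert a dotted-quad IPv4 address (no leading zeros) to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Exit 0 if IPv4 address $1 lies inside CIDR $2 (e.g. 10.244.0.0/16), else 1.
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  # Build the netmask; Bash arithmetic is 64-bit, so trim it back to 32 bits.
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

For example, `ip_in_cidr 172.16.104.22 10.244.0.0/16 || echo "no overlap"` prints `no overlap` for the master address used in this guide, so the default pod CIDR is safe here.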

$ kubeadm init --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=172.16.104.22

[init] Using Kubernetes version: v1.9.4

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks.

[WARNING FileExisting-crictl]: crictl not found in system path

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [pna-kube-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 172.16.104.22]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "scheduler.conf"

[controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

[init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests".

[init] This might take a minute or longer if the control plane images have to be pulled.

[apiclient] All control plane components are healthy after 268.001672 seconds

[uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[markmaster] Will mark node pna-kube-master as master by adding a label and a taint

[markmaster] Master pna-kube-master tainted and labelled with key/value: node-role.kubernetes.io/master=""

[bootstraptoken] Using token: 934abf.016f21c307d8c164

[bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: kube-dns

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node as root:

kubeadm join --token 934abf.016f21c307d8c164 172.16.104.22:6443 --discovery-token-ca-cert-hash sha256:6e7b43b1d54f5faee99786bdc3cd8fc766a5bc1e99def27801441053c5a40bc8

Above is the output of the “kubeadm init” command. It gives you two pieces of information: how to configure kubectl to connect to the cluster, and the command to run on the workers to join them to the master.

Note: Don't run the “kubeadm join” command yet. We will run it after deploying the pod network.
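The join command carries a bootstrap token, and tokens created by kubeadm init expire after 24 hours by default. If you join the workers later than that, generate a fresh join command on the master. The sketch below uses hypothetical helper names around the real `kubeadm token list` and `kubeadm token create --print-join-command` subcommands.

```shell
# Hypothetical helper: succeed if $1 has the bootstrap-token shape
# [a-z0-9]{6}.[a-z0-9]{16} expected by "kubeadm join --token".
is_bootstrap_token() {
  [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# On the master, as root: list existing tokens with their expiry time.
show_tokens() { kubeadm token list; }

# On the master, as root: create a new token and print a complete
# "kubeadm join ..." command to run on each worker.
new_join_command() { kubeadm token create --print-join-command; }
```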

You can configure kubectl anywhere: on the master, on a worker or on your laptop. Before using the kubectl command, copy the /etc/kubernetes/admin.conf file from the master to $HOME/.kube/config on that machine. Also make sure the machine can reach the master over IP.

I'm going to configure kubectl on the master itself. On the master node, I run the commands below, which come from the kubeadm init output.

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

After this, we can run kubectl commands to check whether it is working.

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady

The master reports NotReady because no pod network has been deployed yet. Now that kubectl is working, we will deploy the pod network so that the worker nodes can connect to the master node. We can use any CNI (Container Network Interface) provider, such as flannel, Calico or Weave Net. I'm using flannel here because it is simple to deploy and use. More options can be found here.

# Run the following command on all nodes

$ sudo sysctl net.bridge.bridge-nf-call-iptables=1

# Run the following command on the master node only

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
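The sysctl command above only lasts until the next reboot. Also, the net.bridge.* keys exist only while the br_netfilter kernel module is loaded.

The sketch below persists both settings. It also sets net.ipv4.ip_forward, which current upstream kubeadm docs ask for. The k8s.conf file names and the optional root-directory argument are illustrative choices, not anything kubeadm requires.

```shell
# Sketch: persist the kernel settings used above. The file names are example
# choices; an optional first argument writes under a scratch root for testing.
write_k8s_net_config() {
  local root="${1:-}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # Load br_netfilter at boot so the net.bridge.* sysctl keys exist.
  printf 'br_netfilter\n' > "$root/etc/modules-load.d/k8s.conf"
  # Let bridged pod traffic pass through iptables and allow IPv4 forwarding.
  printf '%s\n' \
    'net.bridge.bridge-nf-call-iptables = 1' \
    'net.ipv4.ip_forward = 1' > "$root/etc/sysctl.d/k8s.conf"
}
```

On each node, as root, `write_k8s_net_config && modprobe br_netfilter && sysctl --system` writes the files and applies them immediately without a reboot.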

Wait a few seconds, then join the worker nodes one by one. On each worker, run the join command that “kubeadm init” printed on the master.

$ sudo kubeadm join --token 934abf.016f21c307d8c164 172.16.104.22:6443 --discovery-token-ca-cert-hash sha256:6e7b43b1d54f5faee99786bdc3cd8fc766a5bc1e99def27801441053c5a40bc8

After some time, you can check the status of the cluster nodes with the command below.

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready <none> 108d v1.14.1

worker-1 Ready <none> 108d v1.14.1

worker-2 Ready <none> 108d v1.14.1

worker-3 Ready <none> 108d v1.14.1

That's it, your cluster is ready. You can now start deploying applications.
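To check readiness from a script instead of reading the table by eye, you can filter the STATUS column of `kubectl get nodes --no-headers`. The function name below is hypothetical. Note that it treats any status other than exactly `Ready`, such as `Ready,SchedulingDisabled` on a cordoned node, as not ready.

```shell
# Hypothetical helper: count nodes whose STATUS column is not exactly "Ready".
# Reads the output of "kubectl get nodes --no-headers" on stdin.
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage on the master:
#   kubectl get nodes --no-headers | count_not_ready
# Or block until every node reports Ready, giving up after five minutes:
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
```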

Single node deployment

If you have only a single node and want to run Kubernetes for development, you can remove the taint that kubeadm places on the master node and use the master for application workloads. For security reasons, Kubernetes by default does not schedule application pods on master nodes, but the command below removes that restriction. I suggest not doing this in a production environment.
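The taint key depends on the release. It is `node-role.kubernetes.io/master` in the version used in this guide, while kubeadm 1.24 applies both that key and `node-role.kubernetes.io/control-plane`, and 1.25 and later apply only the control-plane key. The sketch below picks the key(s) from a version string. The function name is hypothetical, and it assumes a `v1.x.y` version.

```shell
# Hypothetical helper: print the control-plane taint key(s) kubeadm applies
# for a version string such as v1.14.1 (assumes major version 1).
control_plane_taints() {
  local rest="${1#v}" minor
  rest="${rest#*.}"       # drop the major version: "14.1"
  minor="${rest%%.*}"     # keep the minor version: "14"
  if (( minor < 24 )); then
    echo "node-role.kubernetes.io/master"
  elif (( minor == 24 )); then
    echo "node-role.kubernetes.io/master node-role.kubernetes.io/control-plane"
  else
    echo "node-role.kubernetes.io/control-plane"
  fi
}

# Usage (removes each taint; the trailing "-" means "remove"):
#   for t in $(control_plane_taints v1.14.1); do kubectl taint nodes --all "${t}-"; done
```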

$ kubectl taint nodes --all node-role.kubernetes.io/master-

Reference

https://kubernetes.io/docs/setup/independent/create-cluster-kubeadm/

https://kubernetes.io/docs/setup/independent/install-kubeadm/